Note: These are the musings of a man, flawed and prone to misjudgement. Let me preface the potentially provocative title with this: the point of writing this is to promote a discussion on the topic, not to be right or contrarian. If any glaring mistakes are present in the writing, please let me know.

It's true that nobody wants overfit end models, just like nobody wants underfit ones. Overfit models perform great on training data but can't generalize well to new instances; what you end up with is a model approaching a fully hard-coded one, tailored to a specific dataset. Underfit models can't generalize to new data, and they can't model the original training set either. The right model is one that fits the data in such a way that it predicts well on the training, validation and test sets, as well as on new instances. Among data scientists, battling overfitting gets the spotlight because overfitting is more illusory, and rookies are more tempted to create overfit models when they start their Machine Learning journey.
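To make the contrast concrete, here is a minimal Python sketch on made-up 1-D data (an assumption, not data from this post). A constant predictor stands in for an underfit model, a lookup table that memorizes the training points stands in for the "fully hard-coded" overfit model, and an ordinary least-squares line stands in for a model with the right capacity. All function names are hypothetical.

```python
# Made-up training data (assumption): y = 2x + 1 plus alternating +/-0.5 "noise".
train_x = [float(x) for x in range(10)]
train_y = [2 * x + 1 + (0.5 if i % 2 == 0 else -0.5) for i, x in enumerate(train_x)]
# Made-up test data: unseen x values halfway between training points, no noise.
test_x = [x + 0.5 for x in range(9)]
test_y = [2 * x + 1 for x in test_x]


def mse(model, xs, ys):
    """Mean squared error of a prediction function on (xs, ys)."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


mean_x = sum(train_x) / len(train_x)
mean_y = sum(train_y) / len(train_y)


def underfit(x):
    # Ignores the input entirely: too simple to fit even the training set.
    return mean_y


memorized = dict(zip(train_x, train_y))


def overfit(x):
    # "Hard-coded" lookup table: exact on training points, falls back to the
    # training mean for any input it has never seen.
    return memorized.get(x, mean_y)


# Ordinary least-squares line: capacity that matches the underlying trend.
slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(train_x, train_y)) / sum(
    (x - mean_x) ** 2 for x in train_x
)
intercept = mean_y - slope * mean_x


def good_fit(x):
    return slope * x + intercept


for name, model in [("underfit", underfit), ("overfit", overfit), ("good fit", good_fit)]:
    train_err = mse(model, train_x, train_y)
    test_err = mse(model, test_x, test_y)
    print(f"{name:<8}  train MSE = {train_err:6.3f}  test MSE = {test_err:6.3f}")
```

The lookup table reaches zero training error but does no better than the constant model on unseen inputs, while the straight line does well on both.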

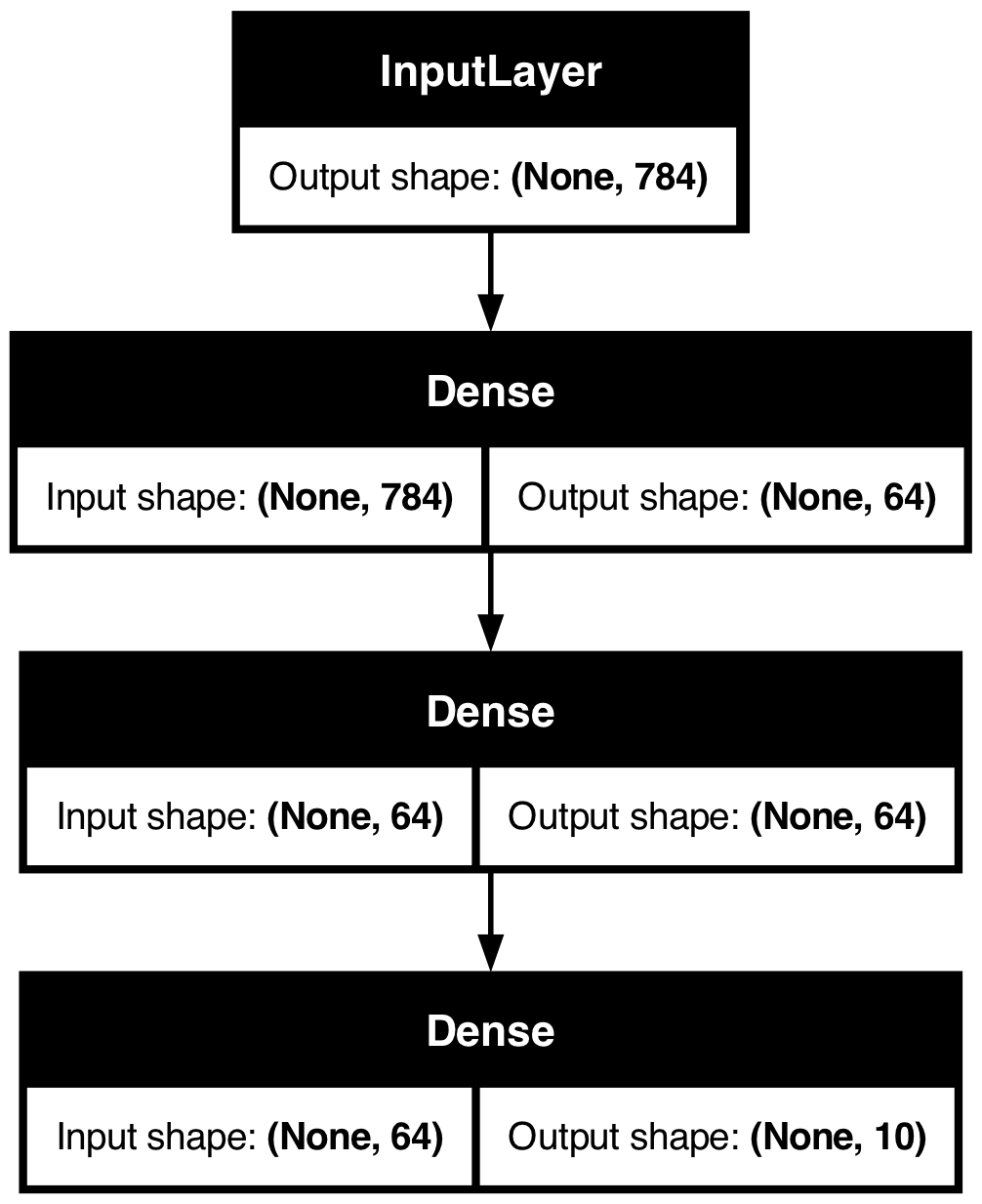

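Now let's build our model with all the layers discussed in this post (convolution, max pooling and dropout), along with Dense (fully connected) layers. One line of the resulting model summary reads:

`conv2d_2 (Conv2D)    (None, 24, 24, 64)    18496`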
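The output shape and parameter count in that summary line can be checked by hand. Below is a minimal Python sketch; it assumes conv2d_2 is a 3x3 convolution with 64 filters, stride 1 and no padding, applied to a 26x26x32 feature map (for example, the output of a 3x3, 32-filter convolution on a 28x28x1 image). Those settings are inferred from the numbers, not stated in the post, and the helper names are hypothetical.

```python
def conv2d_output_size(in_size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (in_size + 2 * padding - kernel_size) // stride + 1


def conv2d_params(kernel_size, in_channels, filters, use_bias=True):
    """Trainable parameters of a square-kernel Conv2D layer: one
    kernel_size x kernel_size x in_channels kernel per filter, plus one bias per filter."""
    weights = kernel_size * kernel_size * in_channels * filters
    return weights + (filters if use_bias else 0)


# Assumed first layer: 3x3, 32 filters on a 28x28x1 image -> 26x26x32.
side_1 = conv2d_output_size(28, 3)
print((None, side_1, side_1, 32), conv2d_params(3, 1, 32))  # → (None, 26, 26, 32) 320

# Assumed conv2d_2: 3x3, 64 filters on that 26x26x32 feature map.
side_2 = conv2d_output_size(side_1, 3)
print((None, side_2, side_2, 64), conv2d_params(3, 32, 64))  # → (None, 24, 24, 64) 18496
```

The 18496 breaks down as 3 x 3 x 32 x 64 = 18432 weights plus 64 biases.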

You can use the links below to understand this topic further:

- CS231n: Convolutional Neural Networks for Visual Recognition
- Coursera course on Convolutional Neural Networks by Andrew Ng

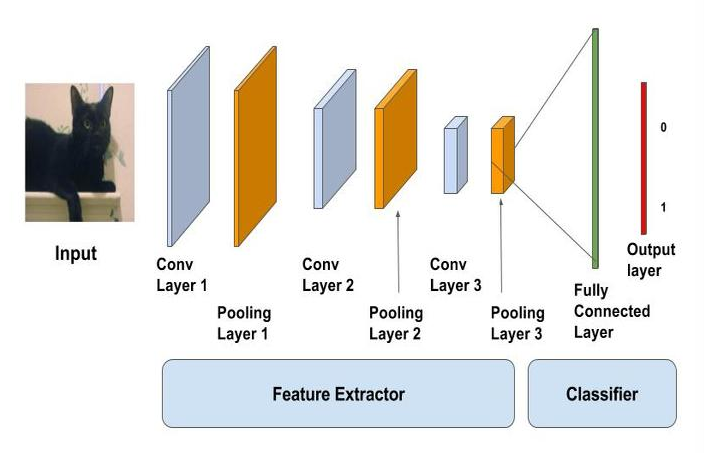

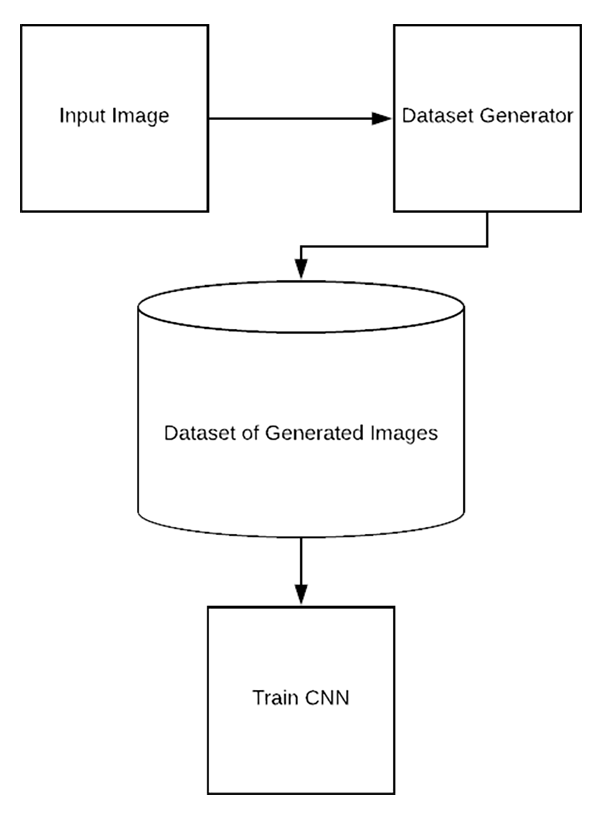

Consider a color image of 1000x1000 pixels, i.e. 3 million inputs (1000 x 1000 x 3 color channels). A normal fully connected network with 1000 hidden units in its first layer would need a weight matrix of 3 billion parameters! CNNs use a set of convolution & pooling operations to deal with this complexity.

Convolution Operation: The convolution operation overlaps a filter/kernel of fixed size on the input image matrix and then slides it across pixel by pixel to cover the entire image/matrix. In the illustration, as the 3x3 filter (yellow) window slides over the 5x5 image matrix, each value inside the window is multiplied by the corresponding filter weight, and the products are summed and stored in a new convolved (pink) matrix.

Pooling Operation: Along with convolution layers, CNNs also use pooling layers to reduce the size of the representation, to speed up the computation, and to make some of the detected features a bit more robust. There are 2 types of pooling: Max Pooling & Average Pooling. We will be using Max Pooling in our ConvNet. The Max Pooling operation simply finds the maximum number within the filter window as it slides over the image matrix and stores it in a new matrix. For example, sliding a 2x2 window with stride 2 over a 4x4 image matrix selects the maximum numbers 6, 8, 3 and 4, one from each window.

Dropout: Dropout is a regularization technique used in neural networks to prevent overfitting. For dropout, we go through each layer of the network and set some probability of eliminating each node. Because units are eliminated at random, no unit can rely on any single input, so the network tends to spread out its weights, which shrinks them.

Combining all these layers with fully connected layers results in the various ConvNet architectures used today for computer vision tasks; an example of a typical CNN architecture is shown in the accompanying figure. This was a very brief introduction to ConvNet layers.
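The operations described above can be sketched in a few lines of plain Python. This is a minimal illustration, not how Keras implements its layers: the helper names are hypothetical, the 5x5 image and 3x3 filter values are assumed (the figure's exact values are not given in the text), and the 4x4 input is made up so that its 2x2 maxima are 6, 8, 3 and 4. Dropout is shown in its common "inverted" form, which rescales surviving units during training; that choice is also an assumption.

```python
import random


def convolve2d(image, kernel):
    """'Valid' 2-D convolution: stride 1, no padding, kernel not flipped."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            # Multiply each value under the window by its filter weight, then sum.
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(image, size=2, stride=2):
    """Max pooling: keep the largest value in each size x size window."""
    out_h = (len(image) - size) // stride + 1
    out_w = (len(image[0]) - size) // stride + 1
    return [
        [
            max(image[i * stride + a][j * stride + b] for a in range(size) for b in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def dropout(activations, drop_prob, rng):
    """Inverted dropout (training time): zero each unit with probability
    drop_prob and scale survivors by 1 / (1 - drop_prob)."""
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


# Fully connected first layer on a 1000x1000x3 image with 1000 hidden units.
dense_weights = 1000 * 1000 * 3 * 1000  # 3,000,000,000 weights

# Hypothetical 5x5 binary image and 3x3 filter.
image = [
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
]
kernel = [
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 1],
]
print(convolve2d(image, kernel))  # → [[4, 3, 4], [2, 4, 3], [2, 3, 4]]

# Hypothetical 4x4 input whose 2x2 window maxima are 6, 8, 3, 4.
grid = [
    [1, 1, 2, 4],
    [5, 6, 7, 8],
    [3, 2, 1, 0],
    [1, 2, 3, 4],
]
print(max_pool2d(grid))  # → [[6, 8], [3, 4]]

print(dropout([1.0, 2.0, 3.0, 4.0], drop_prob=0.5, rng=random.Random(0)))
```

Like most deep-learning libraries, `convolve2d` slides the kernel without flipping it (strictly speaking, cross-correlation); with this symmetric filter the result is the same either way. The 5x5 image shrinks to 3x3 and the 4x4 grid to 2x2, which is the size reduction pooling is used for.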
The class labels are converted to one-hot vectors with `y_train = keras.utils.to_categorical(y_train, num_classes)` and `y_test = keras.utils.to_categorical(y_test, num_classes)`.

Before building the CNN model using Keras, let's briefly understand what CNNs are & how they work. Convolutional Neural Networks (CNNs), or ConvNets, are popular neural network architectures commonly used in computer vision problems like image classification & object detection.
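What the `to_categorical` step does to the labels can be illustrated without Keras. A minimal sketch in plain Python: `to_one_hot` is a hypothetical helper that mirrors the idea (one row per label, with a 1 at the label's index), not Keras's implementation, which returns an array rather than nested lists.

```python
def to_one_hot(labels, num_classes):
    """Convert integer class labels to one-hot rows (a list of lists of floats)."""
    one_hot = []
    for label in labels:
        if not 0 <= label < num_classes:
            raise ValueError(f"label {label} out of range for {num_classes} classes")
        row = [0.0] * num_classes
        row[label] = 1.0  # mark the position of this sample's class
        one_hot.append(row)
    return one_hot


print(to_one_hot([0, 2, 1], num_classes=3))
# → [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
```

One-hot targets like these pair with a softmax output layer of `num_classes` units.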
